Internet: Quo Vadis (Where are you going?)

Articles, blogs, and meetings about the internet of the future are filled with happy, positive words like "global", "uniform", and "open".

The future internet is described in ways that seem as if taken from a late 1960’s Utopian sci-fi novel: the internet is seen as overcoming petty rivalries between countries, dissolving social rank, equalizing wealth, and bringing universal justice.

If that future is to be believed, the only obstacle standing between us and an Arcadian world of peace and harmony is that the internet does not yet reach everyone, or that network carriers are unfairly giving different treatment to different kinds of traffic, or that evil governments are erecting “Great Walls”, or that IPv6 is not yet everywhere, or that big companies are acquiring top level domains, or that encryption is not ubiquitous … The list goes on and on.

I do not agree.

I do not believe that the future internet will be a Utopia. Nor do I believe that the future internet will be like some beautiful angel, bringing peace, virtue, equality, and justice.

Instead I believe that there are strong, probably irresistible, forces working to lock down and partition the internet.

I believe that the future internet will be composed of “islands”.

These islands will tend to coincide with countries, cultures, or companies.

There will be barriers between these islands. And to cross those barriers there will be explicit bridges between various islands.

Network traffic that moves over these bridges will be observed, monitored, regulated, limited, and taxed.

The future internet will be used as a tool for power, control, and wealth.

And to a large degree the users of this future internet will not care about this.

This paper describes this future - a future more likely than the halcyon world painted by others.

History Repeats

Those who cannot remember the past are condemned to repeat it. - George Santayana

Although the details always differ, the general patterns of history do repeat.

Even in our modern world, travel and commerce between nations largely flows through well established portals and follows well established paths.

In the past this pattern of places-and-paths, or, by analogy, a pattern of islands-and-bridges, was even more deeply established.

And what was once unified may break apart - as did Imperial Rome.

This paper argues that the internet is moving towards this historical pattern of controlled isolation, or channeled interconnection; that over the next few years the internet will devolve into islands that follow national, corporate, and cultural boundaries; and that, rather than crossing open, translucent borders, internet traffic between these islands will flow across narrow, well defined, and highly controlled bridges.

A Short History Of The World

The idea that “It’s a small world after all” is fairly new.

For most of human history the world has been a big place, with many lands and many boundaries. Travel and commerce were a matter of distance and required crossings of oceans, rivers, and borders.

Cities and castles were located at strategic points, where highways or rivers forked, where there was a bridge over a river, or where there was a good harbor.

Cities were walled and those walls were pierced by only a few gates.

Our modern eyes tend to view the walled city as a quaint relic of military tensions. But there was another force at work that was at least as strong as the need for military protection: commerce, or more particularly the desire to control commerce.

I am going to draw an analogy in which I compare the internet to the world, particularly the world of Europe, as it existed prior to the year 1800. I am going to compare the flow of commerce to the flow of data packets. This may seem a long reach. However, much of the commerce of today is manifested as flows of data packets. And the desire for opportunity and control applies as much to the flow of packets as it does to commerce.

If we look back through the centuries we see that the world was usually carved into geographic islands: baronies, kingdoms, and empires. These islands merged and split, they competed, they fought, and they conspired.

Yet despite this fragmented world there was at least one constant: commerce between these islands was channeled, regulated, and taxed.

If you controlled a bridge over a river, or just a good ford across that river, you had a lever through which you could obtain authority, power, and wealth.

If you controlled a town at a seaport, at a junction of roads or rivers, or at a place where trade fairs were held, you also had power.

Towns, cities, and castles tended to form where natural or man-made traffic corridors came together. New York City came to dominate its rivals, Boston and Philadelphia, because New York City combines a good harbor with mountain-free access via the Hudson and Mohawk rivers to the Mississippi basin.

We tend to view the walls around medieval cities as forms of protection; yet those walls, and especially the gates through those walls, were just as important as channels through which all commerce into and out of the city had to flow. Every gate had a functionary to levy and collect taxes and duties.

Cities became exchange points where middle-men, merchants, traded and exchanged, exported and imported. Goods generated in a city or in its tributary regions were accumulated in that city and then exported. Goods generated elsewhere were imported into a city where they were consumed locally or sent out to that city’s hinterlands.

This pattern exists today. For example, goods built in China are accumulated in Chinese cities that have good seaport and airport facilities. Those goods arrive in US west coast cities such as Los Angeles and Seattle, where duties and taxes are levied and trademark rights enforced. The goods are then disseminated into the US.

It should not come as a surprise that the internet of today takes a similar pattern. Internet Service Providers (ISPs) are like the regions that generate and consume goods and services, while ISP peering/transit points are like the port cities.

There Is No “Official” Internet

The internet grew out of a rejection of the inflexible world of the telcos where every innovation, every change had to be approved by a central authority.

The internet is founded on a different idea: The idea of innovation at the edges, that the core network should provide just the minimum functionality needed to allow interesting things to happen, grow, and evolve at the edges.

The internet is held together by voluntary choices.

There is no internet Ma Bell; there is no lord-of-the-internet that has waved its golden scepter and designated any particular part of the internet as official, mandatory, and inviolable.

The internet has no official Domain Name System; there is no official IP address space. There is not even an official set of protocol parameters or protocol definitions.

There is, however, a dominant domain name system (DNS), there is a dominant IP address space, there is a dominant set of protocol parameters, and there is a dominant set of internet protocol definitions.

This dominance comes from the inertia of history and user acceptance.

Anyone can choose to create and use their own DNS or their own separate IP address space, or use their own protocol numbers or protocol definitions. But the result is often isolation.

But some people want that isolation. And if they are willing to accept the consequent separation they are free to do these things.

In other words, if a person or group or corporation or country wants to build an internet island, it is free to do so. But if it does, it will have to construct bridges to the rest of us.

In fact there are groups on the internet that have started down this path. There is the Tor system of “onion” routers. There is the “Darknet”. And there are isolated networks operated by military establishments and public utilities.

Many corporations require their employees to use isolated VPNs (Virtual Private Networks) when they travel.

And with the rise of cloud computing, virtual machines, and “containers”, Software Defined Networking (SDN) is being used to create what amount to nearly complete, private overlay internets.

Over-the-Top (OTT) content delivery nets are starting to use the internet as but one way among many of delivering digital products.

These are not necessarily full islands, but they certainly are peninsulas with strong walls across a clear and narrow path to the internet-at-large.

But what happens if lots of people and corporations and countries begin to wonder whether they ought to build their own internet island and control access over some well defined bridges?

At First Many Separate Networks, Then A Network of Networks

The late 1970’s and 1980’s were a period of many networks.

Everybody had their own flavor.

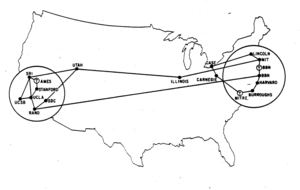

There was Usenet, there was Bitnet, there was DECnet, there was MFEnet. And there were many more, including the ARPAnet.

The ARPAnet was a single network. It was a mesh of packet switches ("IMP"s).

Host computers attached to the IMPs and operated under a thing called the Network Control Program (or Protocol) - NCP.

In this world of many networks there was one particular application that spanned them all: electronic mail. Ad hoc email bridges were constructed between networks. Some very strange address and format transformations were required. For instance, IBM PROFS email was carried over BiSync as if it were a deck of 80 column punched cards. And Usenet email addresses were sequences of relay computers needed to get the email from hither to yon. My own was decvax!ucbvax!asylum!karl.

Various mapping tools were created to hide these inter-system differences. Ultimately we settled on the “name@hostname” form of address and most of the networks converged on a terminal-like protocol that evolved into today’s SMTP.
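To make the difference between the two address styles concrete, here is a minimal Python sketch. It is not a reconstruction of any real mapping tool of the period; the function names are hypothetical, and the rule "the last relay is the user's host" is a simplifying assumption made only for illustration.

```python
def parse_bang_path(address: str) -> tuple[list[str], str]:
    """Split a bang-path address into (relay hosts in order, user name)."""
    parts = address.split("!")
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"not a bang path: {address!r}")
    return parts[:-1], parts[-1]


def to_domain_style(address: str) -> str:
    """Illustrative mapping only: assume the final relay is the user's host."""
    relays, user = parse_bang_path(address)
    return f"{user}@{relays[-1]}"


if __name__ == "__main__":
    relays, user = parse_bang_path("decvax!ucbvax!asylum!karl")
    print("relays:", relays, "user:", user)
    print("domain style:", to_domain_style("decvax!ucbvax!asylum!karl"))
```

The bang path names every hop the sender must know about, while the “name@hostname” form leaves the choice of route to the network.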

As the internet protocols - TCP, and more importantly IP, developed to replace NCP a new notion evolved: This was the notion of a “catnet” or “internet” - a larger network formed by using smaller IP based networks as building blocks.

These smaller networks could differ from one another as long as they each carried IP packets and each formed a block of IP addresses distinct from the addresses used in any other block. These smaller networks became known as “autonomous systems” and the blocks of addresses as “subnets” or “prefixes”. These autonomous systems and address blocks form the foundation of the packet routing systems of today’s internet.
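The requirement that each block of addresses be distinct from every other block can be checked mechanically. The sketch below uses Python's standard `ipaddress` module; the function name `overlapping_prefixes` is hypothetical and the prefixes are reserved documentation ranges, not real allocations.

```python
import ipaddress
from itertools import combinations


def overlapping_prefixes(prefixes):
    """Return every pair of prefixes whose address ranges overlap."""
    nets = [ipaddress.ip_network(p) for p in prefixes]
    return [
        (str(a), str(b))
        for a, b in combinations(nets, 2)
        # IPv4 and IPv6 blocks live in different spaces and never collide.
        if a.version == b.version and a.overlaps(b)
    ]


if __name__ == "__main__":
    distinct = ["192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"]
    clashing = ["192.0.2.0/24", "198.51.100.0/24", "192.0.2.128/25"]
    print(overlapping_prefixes(distinct))
    print(overlapping_prefixes(clashing))
```

An island that assigns its own addresses only has to pass this kind of check internally; the clash appears when it tries to exchange packets with someone else using the same block.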

Because this new open internet system was easier to expand and easier for groups to join, the other networks began to drop their technology differences and re-hosted their applications onto TCP and IP. This began first with the academic and research networks and ended as the big vendor networks - DECnet, IPX/Netware, and even SNA - decided that it was better to switch than to fight.

This evolution may seem somewhat abstract. However, these changes in our way of thinking allowed the internet to grow to include networks of many different technologies - Ethernet, LTE, X.25, avian carrier, Frame Relay.

Individually operated nets based on these diverse technologies could be joined into a system in which IP packets could travel across many of these smaller networks as those packets found their way from a source to a destination and then back again.

This evolution is not finished. In recent years mobile networks - such as Verizon and AT&T - have changed from being largely isolated gardens to being gardens that are sufficiently well merged into the internet that their users continue to pay their subscription fees.

The DNA of internet technology contains many old and deeply embedded genes regarding ways to handle a multiplicity of networking methods and for bridging across the divides. It should not be surprising then that the internet is able to evolve from its current form - a network of networks - into a network of internets.

The Dream of the Universal Internet

This ability to seamlessly transport IP packets across a mesh of smaller, autonomous networks came to be known as the end-to-end principle. It is this principle that allowed the internet to evolve as a relatively dumb packet forwarding network core surrounded by smart edges, and that allowed innovation to occur at the edges without change to the core network of networks. This architecture also allowed the inner parts - those autonomous systems - to change and evolve and grow into today’s network providers without forcing the edges to change and conform.

Now, in 2016, that architecture is running into some limits. The 32-bit IPv4 address space is inadequate to provide enough addresses for the internet as it grows further. Starting in the early 1990’s several design alternatives were put forth to expand this address space. The result is IPv6. A lot of hope is being invested into IPv6.

The Dream of the Universal Internet Is Not Gone. But It Has Changed.

Once the internet dream was of unfettered flows of IP packets from a source IP address to a destination IP address.

That dream has changed.

The changed dream no longer cares whether there is a singular grand unified IP address space with unvexed packet flows.

The changed dream is a hope that “My App works wherever I am and on whatever device I am using.”

The old dream cared about packets; the changed dream cares about Apps and HTTP/HTTPS based web browsers (and their cousins, RESTful APIs).

Monetize, Monetize, and Then Monetize Again - There’s Gold In Them Thar Bridges

The internet has been, and will continue to be, a huge gold mine.

There’s money to be made transporting data.

There’s money to be made blocking data.

There’s money to be made in regulating data.

There’s money to be made by monitoring data, whether that monitoring is to build search-engine indices, to know who is talking to whom, or simply to charge more money for certain kinds of traffic.

But to make really big money one needs to be a place where those flows of data are concentrated. And what better place to stand than on a bridge where large volumes of traffic must cross?

In Theory, Theory And Practice Are The Same. In Practice They Are Not.

The dream of the Universal internet has run into some practical difficulties.

Unfortunately, at the IP packet layer IPv6 is more of a parallel internet than an extension. That means that IPv6 is more of a replacement of the existing internet than it is an upgrade. So existing IPv4 based systems can interact with IPv6 based systems only via various contrivances.

Although they go by many names, these contrivances, each in its own way, are a kind of bridge between an IPv4 world and an IPv6 world.

The “curse of the installed base” is quite real.

There is a prodigious investment in existing IPv4 equipment and knowledge. That creates a resistance to wholesale replacement of those working IPv4 systems.

For large providers and companies some, or even most, of this replacement will be in the form of reasonably inexpensive software upgrades to already owned equipment or will occur as part of a routine depreciate-then-replace cycle.

However, at the level of small businesses and consumers, the old equipment will often have to be scrapped and replaced with new. This is particularly true of appliances such as printers, security cameras, battery-backed continuous power systems, file servers, phone switches, and back-office machines.

In some large number of cases the cost of learning how to use this new equipment might be larger than the cost of the gear itself.

In addition, many vendors who are selling IPv4 based products are happy to continue to make profits from their IPv4 investments and view IPv6 as fixing something that doesn’t seem, to them, to be broken.

Architectural Elegance Collides With The Reality Of Invention

Application Level Gateways (ALGs) - also known as “Proxies” - have been around since the beginning of the net. Email forwarding is a classic example of how ALGs can be used to move email across administrative boundaries - for example via SMTP/SMTPS forwarders between Google’s Gmail and any number of private and corporate email systems.

Network Address Translation (NAT) is newer. And its existence offends many internet purists. Indeed one of the most often heard arguments made in favor of IPv6 over IPv4 is that IPv6 will allow the internet to be swept clean of architecturally non-aesthetic NATs.

Virtual private networks (VPNs) are often private, encrypted internet segments that overlay or tunnel through the more prosaic internet as if it were simply some wires.

And the evolving area of Software Defined Networking (SDN) presents a newfangled way of defining private overlay internets on top of the regular internet.

Network Address Translators (NATs)

NATs allow users to deploy an address block at the edge of the net - using a “private” chunk of IPv4 address space and consuming only one address of “public” IPv4 address space.

Usage of NATs has exploded. NATs are found everywhere. Nearly every home or small business (and a fair number of larger businesses) in America and around the world uses a NAT.

NATs do break the IP packet view of the end-to-end principle because they alter the contents of packets; in particular, they alter the addresses of packets. That causes certain troubles because NATs have to do a lot of juggling of 16-bit TCP and UDP port numbers. And port number space is limited: 65,536 may seem like a large number to us humans, but for a NAT that can be a constrainingly small pool of available port numbers and a limit on how far NATs - small or “carrier grade” - can scale.
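The port juggling can be pictured as a lookup table. The following Python sketch is a toy model, not how any particular NAT product works: the class and method names are hypothetical, it tracks a single public address, and it ignores details real NATs must handle, such as separate TCP and UDP tables, mapping timeouts, and per-destination port reuse.

```python
class PortExhausted(Exception):
    """Raised when the NAT has no public ports left to hand out."""


class Napt:
    """Toy port-translating NAT that shares one public IPv4 address."""

    def __init__(self, public_ip, first_port=1024, last_port=65535):
        self.public_ip = public_ip
        self._free = iter(range(first_port, last_port + 1))
        self._out = {}  # (private_ip, private_port) -> public_port
        self._in = {}   # public_port -> (private_ip, private_port)

    def outbound(self, private_ip, private_port):
        """Rewrite the source of an outgoing flow, allocating a port if new."""
        key = (private_ip, private_port)
        if key not in self._out:
            try:
                public_port = next(self._free)
            except StopIteration:
                raise PortExhausted("no public ports left") from None
            self._out[key] = public_port
            self._in[public_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port):
        """Find the private endpoint for a reply, or None if unknown."""
        return self._in.get(public_port)
```

With the default range, one public address can carry at most 64,512 simultaneous mappings in this model, which is the scaling ceiling described above.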

In addition, NATs play havoc with protocols that carry IP addresses as data. But that problem lands largely on a handful of protocols, such as FTP, SDP, and SIP. Other protocols, such as HTTP/HTTPS, are not burdened with this difficulty.
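FTP's active mode shows the problem plainly: the client sends a `PORT h1,h2,h3,h4,p1,p2` command that spells out its own address and port inside the data stream, so a NAT that rewrites only packet headers leaves a private address in the payload. The Python sketch below illustrates the kind of rewriting a NAT helper has to do; the function names and the example addresses are hypothetical.

```python
def parse_ftp_port(line):
    """Decode an FTP 'PORT h1,h2,h3,h4,p1,p2' command into (ip, port)."""
    verb, _, arg = line.strip().partition(" ")
    if verb.upper() != "PORT":
        raise ValueError(f"not a PORT command: {line!r}")
    fields = [int(x) for x in arg.split(",")]
    if len(fields) != 6 or not all(0 <= f <= 255 for f in fields):
        raise ValueError(f"malformed PORT argument: {arg!r}")
    ip = ".".join(str(f) for f in fields[:4])
    port = fields[4] * 256 + fields[5]
    return ip, port


def build_ftp_port(ip, port):
    """Encode (ip, port) back into an FTP PORT command."""
    octets = ip.split(".")
    return "PORT " + ",".join(octets + [str(port >> 8), str(port & 0xFF)])


def rewrite_ftp_port(line, public_ip, public_port):
    """Replace the private endpoint in a PORT command with the public one."""
    parse_ftp_port(line)  # validate before rewriting
    return build_ftp_port(public_ip, public_port)


if __name__ == "__main__":
    print(parse_ftp_port("PORT 192,168,1,10,19,137"))
    print(rewrite_ftp_port("PORT 192,168,1,10,19,137", "203.0.113.5", 40000))
```

HTTP never embeds the client's address in this way, which is why it crosses NATs without such help.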

There is no denying that NATs are architecturally ugly, violate the end-to-end principle, and do not scale well. But there is also no denying that they have often proven useful, reliable, and adequately efficient.

It is useful to observe that for many applications HTTP and HTTPS are the transport protocol of the internet, not TCP. And traffic carried by HTTP/HTTPS is readily relayed via proxies across address space, protocol-version, or other kinds of internet boundaries. In other words, HTTP/HTTPS, and the prodigious number of applications layered on top of HTTP/HTTPS, can work in an island-and-bridge network of internets.

Even if technically ugly, NATs have forcefully demonstrated that the internet can run acceptably well with re-use of IP address spaces and that most internet protocols and applications can work across NATs with acceptable performance.

Although NATs are not security devices they are in accord with the security principle that one should have multiple boundaries between the intruder (Prince Charming or a black-hat hacker) and the object being protected (the Damsel in Distress or a file filled with user passwords).

NATs may not offer strong walls of protection, but they do add an obstacle. It may be an obstacle that is easily overcome, or not. A NAT is like a moat that the Big Bad Wolf must cross before he can attempt to huff and puff to blow the pigs' houses down. Perhaps the Wolf has a boat, perhaps not.

Application Level Gateways (ALGs)

There is another technology that has grown along with NATs, but which gets less attention - Application Level Gateways (ALGs), often called “Proxies”.

Sometimes they are called “middleboxes” because they act as a kind of “man-in-the-middle”. That phrase is often used as a pejorative by technical purists. But throughout history middlemen have proved to be valuable intermediaries; and being a middleman merchant has often proven to be a role leading to wealth and power.

ALGs can be either transparent or explicit, but in either case they present themselves to the user’s application in lieu of a more distant network service.

ALGs exist at the application layer. ALGs operate as relays between two distinct transport domains. By this I mean that they act in a server role as a transport (TCP) peer to the user’s client. And simultaneously they act in a client role as a transport peer to the distant service that the user’s application is trying to reach. The ALG relays the application service between these two transport domains.

ALGs provide opportunities for data caching (for efficiency) and data modification (loved by certain commercial groups, but not necessarily so loved by users).
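The two roles of an ALG, and the caching opportunity that comes with them, can be sketched without any real networking. In the Python model below the names are hypothetical: `fetch_upstream` stands in for the ALG's client-side connection to the distant service, and `handle` stands in for its server-side face toward the user's application. It is an in-memory illustration under those assumptions, not a working proxy.

```python
class CachingRelay:
    """Toy application level gateway: server to the client, client to the origin."""

    def __init__(self, fetch_upstream):
        self._fetch = fetch_upstream  # callable: request -> response
        self._cache = {}
        self.upstream_calls = 0

    def handle(self, request):
        """Answer the user's request, contacting the origin only on a cache miss."""
        if request not in self._cache:
            self.upstream_calls += 1
            self._cache[request] = self._fetch(request)
        return self._cache[request]


if __name__ == "__main__":
    def origin(request):
        # Stand-in for the distant service on the far side of the bridge.
        return f"content of {request}"

    relay = CachingRelay(origin)
    print(relay.handle("/index.html"))
    print(relay.handle("/index.html"))
    print("upstream calls:", relay.upstream_calls)
```

Because the relay sees whole requests and responses rather than individual packets, the same spot in `handle` is where inspection or modification of application data would happen.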

There is another aspect of ALGs that makes them particularly attractive to those interested in security or data mining. Because ALGs exist at the application level they can intercept, observe, and even alter application level data in a way that would be very difficult to do in a NAT or firewall that tends to view traffic through a packet-at-a-time peephole. Even “deep packet inspection” gear has to do a lot of work to pretend to be an application end point without interfering with the actual application traffic. ALGs, because they are expected to relay application data, are easier to build, more efficient than, and less likely to cause service problems than deep packet inspection engines.

Many people try to prevent data inspection (or alteration) by intermediate ALGs by using end-to-end encryption.

But true end-to-end encryption can often be hard to achieve when application encryption is TLS based (such as when using HTTPS). In that case an intermediary ALG often can simply use a valid certificate from a well known, legitimate certificate authority. Few client user applications validate certificate chains beyond testing that they descend from a known authority. And when an ALG is deployed by the real, remote service then the certificate used by the ALG can be indistinguishable from one that would be used by a true end-to-end peer.

But What About Efficiency?

A lot of mud has been thrown at NATs (including “Carrier Grade NATs”) and ALGs.

Some is deserved: NATs (carrier grade or otherwise) do not scale well.

And both NATs and ALGs add end-to-end delay. In the case of NATs those delays are a few microseconds, which is not enough to make any real difference in the internet world of multiple millisecond round-trip-times.

ALGs can in their worst case add an entire transaction fetch time (but usually it can be much less).

On the other hand, ALGs can also act as caches thus reducing the time for the second and subsequent fetch of that same material to zero.

For HTTP/HTTPS traffic TCP/TLS connection setup overhead is often a major, even dominant, factor in the user perception of response time. To the degree that ALGs allow re-use of already open connections they may actually improve the user perception of responsiveness in some situations.

All-in-all, the issues of computational and response-time efficiency of NATs and ALGs are complex and the answers will very much depend on the specific facts of each separate case. In other words, it is hard to make broad predictions one way or the other.

However, the issue of efficiency may be a distraction. No one seems bothered by the rather significant performance cost of HTTPS relative to HTTP, or by the inefficiency of VPN and SDN overlay networks. And on the world-wide-web the massive use of “analytics” trackers adds so much overhead to a typical web page that other kinds of inefficiency can be vanishingly small by comparison.

Weak Glue

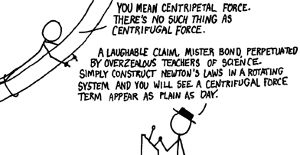

The centripetal forces that hold the internet together are weak.

The main force holding the internet together is simple inertia - people don't tend to change things that work.

And up to now the internet has worked pretty well.

Those words “up to now” are important; certain groups, such as countries, cultural groups, religions, and certain commercial groups are starting to show signs of discontent. And at the risk of excessive foreshadowing, these groups are ones around which I expect the pieces of a fragmented internet to coalesce.

For instance, up to now there have been some aspects of the internet that have worked exceptionally well. For example, the operators of the Domain Name System roots have provided superb service; and they have done so largely without outside oversight. But domain name servers, root or otherwise, are an attractive point for those who wish to do real-time monitoring or regulation of what is happening on the internet - See my note What Could You Do With Your Own Root Server?

Internet standards from the IETF, W3C, and IEEE are voluntary. The main force holding people to them is that if they are not followed - or at least not followed in rough, approximate terms - then it may be hard to exchange packets with others unless they too diverge from the standards in similar ways. This permission to differ has value: Many of today’s most popular protocols, indeed the entire concept of the world-wide-web, came about through experimentation by users outside of the internet standards bodies.

It used to be hard to build a network, or a network of networks, i.e. an internet. Today anyone with enough money can buy the pieces and build a usable internet that is distinct from the public internet. It may even use its own IPv4 or IPv6 address space, or use its own domain name roots and servers.

The users of such a private internet may find themselves feeling somewhat isolated - or insulated. But that’s not always a negative. They may desire that isolation; they may feel comfortably insulated; they might be quite happy reaching “the outside” through pathways that are well defined, easily controlled, and easily defended.

This is not a fantasy - there do exist dark and private networks that take pride in their separateness.

IPv6 - Glue or Lubricant?

IPv6 is not directly interoperable with IPv4. An IPv6 based internet would not be an extension of the classic, legacy IPv4 internet of today. An IPv6 based internet would, instead, be a replacement internet.

There will be no flag day on which everyone switches to IPv6.

So the IPv4 and IPv6 internets will need to coexist and interoperate for a long time into the future.

Even if the internet is not further fragmented, the IPv4 and IPv6 internets form two distinct islands.

In other words, the internet has already been fragmented along the IPv4-IPv6 boundary.

Several bridging measures have been developed to intermediate between IPv4 and IPv6 devices. These measures have been somewhat successful at masking - but not eliminating - the gap.

Many newer devices are “dual-stacked”. In other words they speak both IPv4 and IPv6. For each connection a choice is made whether to use the IPv4 internet or the IPv6 internet for that particular connection.
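That per-connection choice can be sketched in a few lines of Python. The function name `pick_address` is hypothetical, and the simple "prefer IPv6 when it is available" rule is an assumption made for illustration; real operating systems apply more elaborate address selection and fallback logic.

```python
import ipaddress


def pick_address(candidates, have_ipv6, have_ipv4=True):
    """Choose which internet to use for one connection.

    candidates: addresses published for the destination (IPv4 and/or IPv6).
    have_ipv6 / have_ipv4: whether this device currently has connectivity
    on that internet.
    """
    parsed = [ipaddress.ip_address(c) for c in candidates]
    v6 = [a for a in parsed if a.version == 6]
    v4 = [a for a in parsed if a.version == 4]
    if have_ipv6 and v6:
        return str(v6[0])
    if have_ipv4 and v4:
        return str(v4[0])
    return None  # no internet in common with the destination


if __name__ == "__main__":
    dest = ["192.0.2.1", "2001:db8::1"]  # documentation-only addresses
    print(pick_address(dest, have_ipv6=True))
    print(pick_address(dest, have_ipv6=False))
    print(pick_address(["2001:db8::1"], have_ipv6=False))
```

The last case returns nothing: a device with only IPv4 connectivity and a destination with only an IPv6 address share no internet, and some intermediary has to bridge them.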

Dual stacked devices are like those power bricks that can be plugged into European 220 volt outlets or US 110 volt outlets. The fact that those power bricks can operate using either European or American utility power does not mean that the European and American power grids have merged and become one. Similarly, the use of dual stack devices does not mean that the IPv4 and IPv6 internets have merged and become one.

IPv6 was conceived as a way to maintain a grand, global, unified internet address space. However, the fact that IPv6 requires us to deploy intermediaries to reach legacy IPv4 devices legitimizes the presence of intermediaries in the internet.

Once we come to accept intermediary devices as normal and routine, we have taken a big step towards an island-and-bridge internet.

Address Assignment Bureaucracies May Be Seen As A Nuisance

Except for NAT-ed edge/stub networks, most IPv4 and IPv6 networks obtain their address space from a worldwide pool. Allocation procedures often require payments of money, demonstrations of intended use, partial allocations, and then subsequent partial allocations only after follow-ups showing that the usage plan is being followed.

It is not a big mental leap for a network operator to say to itself: “Hmmm, if a large part of my traffic already has to pass through proxies and other intermediary devices then why should I bother to obtain addresses from the worldwide pool? Perhaps I can simply pass all of my traffic, whether IPv4 or IPv6, through proxies? Then I can self-assign IPv4 or IPv6 addresses to myself and avoid expensive and intrusive snooping by the address assignment bureaucracies.”

Forces of Fragmentation

The advent of the World Wide Web in the mid 1990’s increased the incentive for a global, unified internet.

Today that incentive has been supplemented by counter-incentives to splinter the internet.

This is not to say that the desire of web users to perceive a seamless mesh of web pages has gone away.

Rather, it is that technology has evolved so that perception can be adequately maintained even when there are boundaries and barriers on the internet.

Perception is important: if the internet can be fragmented without violating the users' perception of wholeness, then there is little that is left to the argument that there must be one glorious, unified, global IP address space with unvexed end-to-end flows of packets.

One may conclude that none of these forces is, on its own, a strong or sufficient driver of fragmentation. However, in combination these forces accumulate and cause the formation of small cracks in the unity of the internet.

Small cracks are harbingers. Small cracks can become rifts.

So, what are some of these forces of fragmentation?

Nobody Is Feeling Secure

Almost every day the news has yet another report of a data breach or a security vulnerability.

Fear that systems are insecure is driving change.

The singularity of today’s domain name system makes it a very obvious single point of internet failure as well as a single point of attack. There’s new interest in decentralizing DNS or replacing it with a system without a single point of failure, or single point of control.

It was presumed that newer equipment is more secure. And to a large degree that is true. However, the news reports keep telling us that even the latest and greatest of software, computers, and things containing computers, such as mobile phones, are vulnerable.

People and institutions are beginning to perceive that in order to be safe they need to disconnect, at least in part, from the internet. They are deciding that their critical systems need to be “air gapped”, and that their other systems should be separated from the main internet by high and thick barriers.

In other words, operators of computer networks and computer systems are beginning to think like mediaeval town-dwellers: they want a wall around their community.

Network firewalls have been around for a long time. This new movement is something more intense, something in which “being separate” ranks higher than “being connected”. If a firewall is a moat over which there are protected bridges, this new thing is more like a moat with alligators and only a single boat that is under the strict control of the warden.

Lifeline Grade Internet Utility

This drive to separate and isolate for security becomes even more intense as the net becomes a lifeline grade utility upon which human health and safety depend.

The drive to control risk will push the deployment of barriers in the internet; over time those barriers become the gaps that separate internet islands.

The Rise of the App

Since the iPhone came out in 2007 we have seen a new wave of internet tools: Apps.

Apps can be utterly blind to boundaries. Or they can honor boundaries if the provider of an App perceives that doing so would be of commercial or competitive value.

Users care that their Apps work; they neither see nor care about the elegance (or ugliness) of the underlying connectivity plumbing.

App-specific handles and URLs are the new internet addresses. DNS names are becoming less and less visible to users or are transformed into non-mnemonic “short” forms.

The end-to-end principle, if it still has any life, now means that users can use their Apps wherever they happen to be at any given moment.

The great majority of Apps use only a very few internet protocols, most particularly HTTP/HTTPS, SMTP/SMTPS, DNS, and SIP.

Every one of these protocols is easily proxied.

Every one of these protocols could work well in an island-and-bridge network of internets.
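To make “easily proxied” a little more concrete, here is a minimal Python sketch of the decision a bridge might make for a single HTTP request. It relies only on the fact that an HTTP/1.1 request names its destination in the Host header, so an intermediary can terminate the connection and choose where to send the request next. The function name `next_hop_for_http`, the island suffix `example.island`, and the bridge address `bridge-a.invalid:3128` are all hypothetical, and IPv6 literal hosts are not handled.

```python
def next_hop_for_http(request: bytes, bridges: dict, default: str) -> str:
    """Pick a bridge for an HTTP/1.1 request using only its Host header.

    `bridges` maps a (hypothetical) island name suffix to the address of
    the bridge that serves that island.
    """
    # Only the header section matters; the body, if any, is ignored.
    head = request.split(b"\r\n\r\n", 1)[0].decode("latin-1")
    host = ""
    for line in head.split("\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            host = value.strip().lower()
            break
    host = host.partition(":")[0]  # drop an optional ":port"

    # The longest matching suffix wins, so a more specific island is preferred.
    best = ""
    for suffix in bridges:
        matches = host == suffix or host.endswith("." + suffix)
        if matches and len(suffix) > len(best):
            best = suffix
    return bridges[best] if best else default


request = b"GET /feed HTTP/1.1\r\nHost: www.example.island:8080\r\n\r\n"
bridges = {"example.island": "bridge-a.invalid:3128"}
print(next_hop_for_http(request, bridges, "direct"))  # bridge-a.invalid:3128
```

The same idea plausibly carries over to the other protocols named above, since each of them also carries a destination name inside the application data (a mail domain, a query name, a SIP URI) rather than only in the IP header.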

Pay And Obey: Getting Tired Of Rent Payments?

Certain internet resources, most particularly IP address space and domain names, are managed by regulatory bodies (such as the Regional Internet Registries (RIRs) and ICANN). These resources are obtained only through a process that involves the payment of recurring rent and the signing of a restrictive contract.

Businesses are beginning to recognize that this situation brings risks and costs. If a business misses a step in the regulatory dance it may discover that it has lost those internet resources upon which it depends. This has engendered a new layer of services, often quite expensive services, through which corporations and trademark holders outsource management of domain names in the face of the never ending and ever changing set of ICANN notices, contact verifications, and mandatory periodic registration renewals.

And because domain names are rented for no more than ten years at a time, we are starting to discover that we are on the verge of losing large chunks of human creativity: as people die or otherwise fail to pay the rental fees for their domain names, the websites behind those domain names are disappearing. When historians come to write the story of the internet era they may find that the raw material has eroded away.

Regressive, and largely arbitrary, regulatory policies over internet resources will drive people and organizations to seek alternatives where continuity and longevity are more assured.

The islands in an island-and-bridge internet need not adhere to one world-wide uber-regulatory body. Instead each island can adopt policies that it feels appropriate for its community.

The Present Course of Internet Governance Is Beginning To Look Like It May Fail

ICANN was initially designed to be a dog wagged by its tail. In this it has succeeded beyond expectations; it is now a regulatory body that is expensive, highly bureaucratic, and well shaped to allow chosen economic and governmental interests to block internet change.

(Charles Dickens must have foreseen ICANN when he wrote Chapter 10, "Containing the whole Science of Government", in his 1857 book *Little Dorrit*.)

ICANN has imposed an artificial economic system on much of the internet. There are those who are finding the ICANN system to be expensive, immutable, opposed to innovation, arbitrary, and capricious.

ICANN maintains its grasp over the internet by controlling a few key functions, most particularly the generation of a DNS root zone file image. This has suggested to several potential actors that rather than changing ICANN they could step around it by creating new, but consistent, DNS roots or even by promoting a new naming system in lieu of DNS.

In addition, ICANN has been seen by many as a symbol of United States hegemony over the internet.

We Have Already Begun To Live With A Fragmented Internet

NATs and ALGs have taught us that there is viable life within “private” address spaces.
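As a reminder of how a NAT keeps a private address space usable, the sketch below models a heavily simplified port-translating NAT in Python. The class name `TinyNat`, the starting port 40000, and the addresses are illustrative assumptions. Real NATs also track the protocol, the remote endpoint, and timeouts, none of which is modeled here.

```python
import ipaddress


class TinyNat:
    """A toy port-translating NAT: many private hosts share one public address."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, src_ip: str, src_port: int):
        """Rewrite the source of a packet leaving the private address space."""
        if not ipaddress.ip_address(src_ip).is_private:
            raise ValueError("source is not in a private address space")
        key = (src_ip, src_port)
        if key not in self.outbound:
            # First packet of a flow: allocate a public port and remember it.
            self.outbound[key] = self.next_port
            self.inbound[self.next_port] = key
            self.next_port += 1
        return (self.public_ip, self.outbound[key])

    def translate_inbound(self, dst_port: int):
        """Return the private endpoint for a reply, or None if nothing asked for it."""
        return self.inbound.get(dst_port)


nat = TinyNat("203.0.113.7")
print(nat.translate_outbound("10.0.0.5", 5555))  # ('203.0.113.7', 40000)
print(nat.translate_inbound(40000))              # ('10.0.0.5', 5555)
print(nat.translate_inbound(12345))              # None
```

The last line is the interesting one: traffic that nobody inside asked for has no mapping and simply has nowhere to go, which is the seed of the island behavior described here.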

Corporations often force their employees to use encrypted VPNs when they work from home or when traveling.

Companies such as VMware offer Software Defined Network capabilities so that customers can build large scale, private overlay networks that make it easy to fork-lift entire branch offices to new locations.

Users of very large communities, such as Facebook, often live their entire internet lives within that community. They may not realize it, but they are already living on an island. And the only bridges they care about are those needed to assure that their favorite feeds continue to work.

The internet may not yet be fragmented, but it certainly is full of cracks - and the people who create and use those cracks find them to be useful and convenient.

Making It Real

If you have stuck with me this far you may be saying “This is all conjecture, there’s no reality to it.”

Perhaps you are right. Perhaps IPv6, after trying for over two decades to gain traction, will suddenly accelerate and replace the existing IPv4 internet. And all the pressures pushing people, corporations, and countries to want isolation and protection will be answered through means yet unimagined. Perhaps.

But what if you are not right? What if the incentives toward fragmentation continue? How could this vision of islands and bridges become real?

The Islands

The islands of the new island-and-bridge world will most likely evolve out of the closed and walled gardens of today’s internet.

The first may be China.

The mobile/wireless providers - Verizon, AT&T - are already largely on their way to being islands.

Internet companies whose user communities largely live and work within the company’s offerings, companies such as Facebook and Microsoft/LinkedIn, are likely early island-builders.

Netflix Island sounds like the name of a show but it is likely to be the name of one of the early islands.

Religious and cultural groups have long maintained a degree of separation. One might anticipate that the Vatican - which has already obtained the top level domain .catholic in several scripts - may carve an internet world unto itself. The Mormon church has also been active in creating its own relatively closed internet monastery.

Other islands will form around language. For example we may see internet islands forming around Arabic, possibly multiple islands to reflect religious schisms.

Another source of island building may be groups that need strong security barriers. For example, there has been a lot of chat of late about how contact with the internet increases the chance that our electrical power infrastructure might be compromised. The standard answer to these concerns has been “disconnect from the internet.”

The Bridges

I suspect that few people or organizations will set out with the express purpose of constructing either an island or a bridge. Rather they will just slip into it incrementally.

In the beginning the islands will tend to use the same IPv4 and IPv6 address spaces as the internet in general.

But use of a general, public address space brings a risk. The risk is that those packets that do, somehow, get past “the firewall”, will be able to roam the island, and do damage. And any return traffic that is engendered may flow outward across the firewall without constraint.

So there will be a temptation to say “let’s do more than just impose a firewall, let’s use a distinct IP address space.” NATs will be considered but will be found wanting. That, in turn, will invite application level gateway technology.

The incessant demands of law enforcement and national security in conjunction with those groups' frustration with encrypted traffic will drive the deployment of Application Level Gateway based bridges in which the various application flows will be in, or can be put into, “the clear” at some point inside the ALG.

The Steps

The changes to create an island-and-bridge network-of-internets are evolutionary and incremental; there need be no flag days or grand coordinated efforts. This is consistent with the internet’s principle of innovation at the edge of a relatively stupid packet forwarding core. The difference would be that in this case the “edge” would be applications that seek to move application specific data chunks (often called Application Data Units or ADUs) over TCP (or HTTP/S over TCP) connections. The analog to packet routers would be application proxies and Application Level Gateways.
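A rough Python sketch may help show what “the analog to packet routers” could look like at the application layer. It models a bridge that receives one Application Data Unit at a time, records what it saw, and forwards the unit only if policy allows. The names `Adu` and `Bridge`, the island labels, and the allow-list policy are assumptions made for illustration. This is not a description of any existing gateway product.

```python
from dataclasses import dataclass, field


@dataclass
class Adu:
    """One application data unit crossing between islands (hypothetical shape)."""
    app: str          # e.g. "http", "smtp", "sip"
    src_island: str
    dst_island: str
    payload: bytes


@dataclass
class Bridge:
    """A toy application level gateway with an allow-list and an audit log."""
    allowed: set                              # {(src_island, dst_island, app), ...}
    log: list = field(default_factory=list)   # every ADU seen, allowed or not

    def forward(self, adu: Adu):
        permitted = (adu.src_island, adu.dst_island, adu.app) in self.allowed
        # The bridge observes every unit, including the ones it refuses.
        self.log.append(
            (adu.src_island, adu.dst_island, adu.app, len(adu.payload), permitted)
        )
        return adu if permitted else None


bridge = Bridge(allowed={("island-a", "island-b", "http")})
web = Adu("http", "island-a", "island-b", b"GET /feed")
call = Adu("sip", "island-a", "island-b", b"INVITE")
print(bridge.forward(web) is web)  # True
print(bridge.forward(call))        # None
print(len(bridge.log))             # 2
```

Because the unit of forwarding is application data rather than an IP packet, the bridge sees the content in the clear at the moment it decides, which is exactly the property that makes such bridges attractive to those who want to monitor or regulate the crossing.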

The internet is not particularly brittle: Large commercial providers, particularly ones in the consumer space (such as Comcast and Verizon) have well learned that they can make technical changes with impunity even to seemingly fundamental things such as internet standards. Anyone who has been caught by Comcast’s Xfinity wireless mesh can see that they routinely interfere with and alter the user’s end-to-end web traffic.

Here are some of the steps to move towards an island-and-bridge network of internets. These steps need not occur in any particular sequence:

- Disconnected DNS names, like .onion or .tor, will begin to erode the belief that there shall be exactly one catholic domain name root for the entire internet.

- DNS root systems that compete with or supplement the ICANN/NTIA/Verisign one will further erode the belief that the internet requires exactly one DNS root and elevate the idea that there can be multiple DNS systems as long as they are consistent with one another.

- Companies or groups that are currently behind a NAT will abandon limited (and increasingly troublesome) NAT technology and switch to 100% ALGs (although they will possibly call those “firewalls”, “load balancers”, “border gateway controllers”, or just “proxies”).

- VPNs that span across providers will show how to deploy applications across internet boundaries.

- Software Defined Network (SDN) overlay networks with their own private address spaces will, like VPNs, begin to reveal the idea that users can move up the levels of network abstraction and build a perfectly useful net out of concatenated TCP connections.

- Over the Top (OTT) content delivery networks that use the internet as just one of several ways to move packets will have an impact similar to that of VPNs and SDN overlays.

These changes can happen privately, quietly - until one day we wake up and realize that the internet has changed beneath us.
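The idea in the steps above that multiple DNS roots can coexist “as long as they are consistent with one another” can be given one possible, simplified meaning: any top level domain that appears in both roots must be delegated to the same name servers, while each root remains free to carry names the other lacks. The Python sketch below checks that condition. The function names, the dictionary representation of a root, and all server names are hypothetical.

```python
def conflicting_tlds(root_a: dict, root_b: dict) -> list:
    """Return the TLDs that both roots carry but delegate differently."""
    shared = root_a.keys() & root_b.keys()
    return sorted(tld for tld in shared if set(root_a[tld]) != set(root_b[tld]))


def roots_are_consistent(root_a: dict, root_b: dict) -> bool:
    """Two roots are treated as consistent when no shared TLD conflicts."""
    return not conflicting_tlds(root_a, root_b)


# Each root maps a TLD to the name servers it delegates to (made-up data).
legacy_root = {"com": ["a.ns.example"], "org": ["b.ns.example"]}
island_root = {"com": ["a.ns.example"], "island": ["ns.island.example"]}
rogue_root = {"com": ["ns.elsewhere.example"]}

print(roots_are_consistent(legacy_root, island_root))  # True
print(conflicting_tlds(legacy_root, rogue_root))       # ['com']
```

Under this reading a supplementary root that only adds names is harmless, while a root that answers differently for an existing name is the kind of inconsistency users would actually notice.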

What About Net Neutrality?

Network Neutrality is an attempt to prevent "carriers" from discriminating on the basis of traffic types, traffic sources, and traffic receivers.

Network Neutrality is an attempt to create a kind of “common carrier” world. In a sense N-N is trying to pump life back into the end-to-end principle.

This paper has argued that at the IP packet layer, the end-to-end principle is dying, if not already dead. I do not see that the N-N efforts are going to prevail; at best, I hope that we can prevent and dilute the worst effects of unfettered network non-neutrality.

I am an advocate of non-discrimination in the carriage of IP packets by providers. But I recognize that not all kinds of traffic are equal - conversational Voice-Over-IP (VoIP) needs low packet loss, low transit delay, and low variation in that transit delay (i.e. low jitter) for a relatively light (50 packets/second) flow of regularly spaced packets. Bulk transfers need a net that allows large sized packets, often bunched into bursts. And streaming video needs paths that can provide low loss, low jitter for flows that have periodic bursts of large packets. It makes sense for providers to recognize and accommodate these different kinds of needs.
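To put numbers on how light such a voice flow is, the short Python calculation below starts from the 50 packets per second mentioned above. The codec rate of 64 kbit/s (the rate of G.711) and the 40 bytes of per-packet header (IPv4 20 + UDP 8 + RTP 12, without options) are assumptions added for illustration. Other codecs and IPv6 would give different figures.

```python
def voip_flow(packets_per_second=50, codec_bits_per_second=64_000, header_bytes=40):
    """Return (packet spacing in ms, payload bytes per packet, IP-layer bit/s)."""
    interval_ms = 1000 / packets_per_second
    payload_bytes = codec_bits_per_second / 8 / packets_per_second
    ip_bits_per_second = (payload_bytes + header_bytes) * 8 * packets_per_second
    return interval_ms, payload_bytes, ip_bits_per_second


print(voip_flow())  # (20.0, 160.0, 80000.0)
```

Under these assumptions a call is a packet every 20 milliseconds and roughly 80 kbit/s in each direction. That is tiny next to streaming video, yet far less tolerant of loss and jitter, which is why treating the two kinds of flow identically serves neither well.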

In the island-and-bridge world, the intent is distinctly not neutral. Insularity and isolation are the opposite of the free and open world of neutrality. But despite the advocacy of network neutrality, it seems as if a lot of people, corporations, governments, religions, and cultural groups will be attracted by the ability to create walls with only a few well controlled portals.

Conclusion

The island-and-bridge internet is coming.

It’s not going to come as a tsunami; it’s going to come as a slow incoming tide.

Users of Apps will not be particularly concerned because the providers who create these islands and bridges will be driven by self-interest to provide the necessary application bridging/proxying machinery so that users are not unduly distressed, or at least not sufficiently distressed to take their data elsewhere.